Unit 8. Proximal Policy Optimization (PPO)

Introduction

PPO improves the stability of the agent’s training by avoiding policy updates that are too large. To do so, it clips the ratio between the current and the old policy to a specific range, $[1 - \epsilon, 1 + \epsilon]$, which keeps each update small and training stable.

Intuition

- Smaller policy updates during training are more likely to converge to an optimal solution.

- A policy update that is too big can make the agent fall “off the cliff” (end up with a bad policy), after which it can take a long time to recover, or it may never recover.

Introducing the Clipped Surrogate Objective Function

Recap: The Policy Objective Function (with A2C)

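$L^{PG}(\theta) = \hat{\mathbb{E}}_t\left[\log \pi_\theta(a_t \mid s_t)\,\hat{A}_t\right]$

$\log \pi_\theta(a_t \mid s_t)$: log probability of taking action $a_t$ at state $s_t$.

$\hat{A}_t$: advantage function.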
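A minimal sketch of how $L^{PG}$ is estimated from a batch: the expectation $\hat{\mathbb{E}}_t$ becomes an average over sampled timesteps. The function name and the batch values are hypothetical, chosen only for illustration; in practice the log probabilities come from the policy network and the objective is maximized with an autodiff framework.

```python
import math

def policy_gradient_objective(log_probs, advantages):
    """Sample estimate of E_t[log pi_theta(a_t|s_t) * A_t] (hypothetical helper)."""
    if len(log_probs) != len(advantages):
        raise ValueError("log_probs and advantages must have the same length")
    # Average log pi(a_t|s_t) * A_t over the sampled timesteps
    return sum(lp * adv for lp, adv in zip(log_probs, advantages)) / len(log_probs)

# Hypothetical batch: probabilities the policy gave to the actions it took
probs = [0.5, 0.8]
advantages = [1.0, 2.0]
log_probs = [math.log(p) for p in probs]
print(policy_gradient_objective(log_probs, advantages))
```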

Problem: training is sensitive to the step size of the policy update.

- Too small: the training process is slow.

- Too high: there is too much variability in training.

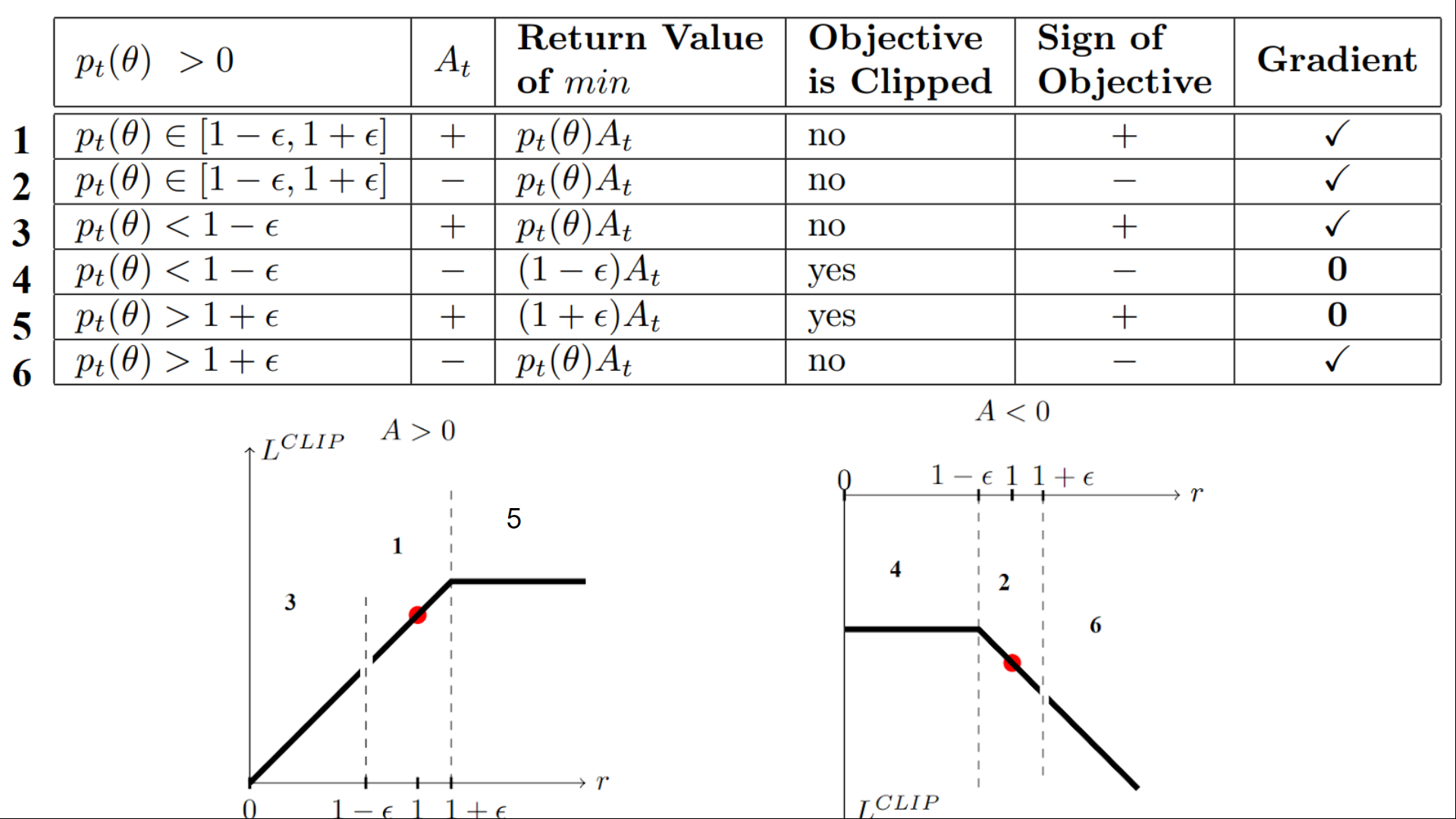

PPO’s Clipped Surrogate Objective Function

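$L^{CLIP}(\theta) = \hat{\mathbb{E}}_t\left[\min\left(r_t(\theta)\hat{A}_t,\ \text{clip}(r_t(\theta), 1 - \epsilon, 1 + \epsilon)\hat{A}_t\right)\right]$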
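A minimal sketch of a sample estimate of $L^{CLIP}$, assuming the ratios $r_t(\theta)$ (defined in the next subsection) and advantages are already computed as plain floats. The function name is hypothetical; real implementations work on tensors and usually minimize the negated objective as a loss.

```python
def clipped_surrogate_objective(ratios, advantages, epsilon=0.2):
    """Sample estimate of E_t[min(r_t*A_t, clip(r_t, 1-eps, 1+eps)*A_t)] (hypothetical helper)."""
    if len(ratios) != len(advantages):
        raise ValueError("ratios and advantages must have the same length")
    total = 0.0
    for r, adv in zip(ratios, advantages):
        # Clamp the ratio to [1 - eps, 1 + eps]
        clipped_r = min(max(r, 1.0 - epsilon), 1.0 + epsilon)
        # Pessimistic choice: the smaller of the unclipped and clipped terms
        total += min(r * adv, clipped_r * adv)
    return total / len(ratios)

# Hypothetical batch of three timesteps
ratios = [1.5, 0.9, 0.5]
advantages = [1.0, 2.0, -1.0]
print(clipped_surrogate_objective(ratios, advantages))
```

Taking the minimum makes the objective a lower bound on the unclipped one, so the policy gains nothing from moving the ratio further outside the range.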

The ratio function

$r_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{old}}(a_t \mid s_t)}$

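- If $r_t(\theta) > 1$, the action $a_t$ at state $s_t$ is more likely in the current policy than in the old policy.

- If $0 \le r_t(\theta) < 1$, the action is less likely in the current policy than in the old policy.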
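A small sketch of computing the ratio. It assumes we store log probabilities (a common practice, not stated in these notes), in which case $r_t(\theta) = \exp(\log \pi_\theta - \log \pi_{\theta_{old}})$. The helper name and the probabilities are hypothetical.

```python
import math

def probability_ratio(new_log_prob, old_log_prob):
    """r_t(theta) = pi_theta(a_t|s_t) / pi_theta_old(a_t|s_t), computed from log-probabilities."""
    # exp(log a - log b) equals a / b
    return math.exp(new_log_prob - old_log_prob)

# Hypothetical probabilities of the same action a_t at state s_t
old_p, new_p = 0.4, 0.6
r = probability_ratio(math.log(new_p), math.log(old_p))
print(r > 1)  # True: a_t became more likely under the current policy
```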

This ratio can replace the log probability we use in the policy objective function: multiplying the ratio by the advantage gives the unclipped part of the new objective.

The unclipped part

$L^{CPI}(\theta) = \hat{\mathbb{E}}_t\left[r_t(\theta)\hat{A}_t\right]$
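A minimal sketch (hypothetical helper name) showing why the unclipped objective needs a constraint: with a positive advantage, $L^{CPI}$ keeps growing as the ratio grows, so nothing stops the optimizer from pushing $r_t(\theta)$ far away from 1.

```python
def cpi_objective(ratios, advantages):
    """Sample estimate of E_t[r_t(theta) * A_t], with no clipping (hypothetical helper)."""
    if len(ratios) != len(advantages):
        raise ValueError("ratios and advantages must have the same length")
    return sum(r * adv for r, adv in zip(ratios, advantages)) / len(ratios)

# Positive advantage: the objective increases without bound as the ratio grows
for r in [1.0, 2.0, 5.0]:
    print(r, cpi_objective([r], [1.0]))
```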

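Without a constraint, an action that becomes much more probable under the current policy (a ratio far from 1) leads to an excessively large policy gradient step. We therefore clip the ratio to limit how far the current policy can diverge from the old one.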

- TRPO (Trust Region Policy Optimization) uses a KL divergence constraint outside the objective function to limit the policy update. It is complicated to implement and takes more computation time.

- PPO (Proximal Policy Optimization) clips the probability ratio directly in the objective function. It is simple to implement.

The clipped objective

$\text{clip}(r_t(\theta), 1 - \epsilon, 1 + \epsilon)\hat{A}_t$

$\epsilon$ is a hyperparameter. In the paper it is set to 0.2, so the ratio can only vary between 0.8 and 1.2.
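A tiny sketch of the clip operation with $\epsilon = 0.2$; `clip` here is a hypothetical stand-in for a tensor clamp such as the one in a deep learning framework.

```python
def clip(value, low, high):
    """Clamp value to [low, high], as in clip(r_t(theta), 1 - eps, 1 + eps)."""
    return max(low, min(value, high))

epsilon = 0.2  # value used in the PPO paper
for r in [0.5, 1.0, 1.5]:
    # Ratios below 0.8 become 0.8, above 1.2 become 1.2, others pass through
    print(r, clip(r, 1 - epsilon, 1 + epsilon))
```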

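- Unclipped: when $\min$ selects $r_t(\theta)\hat{A}_t$, we get the normal objective and compute normal gradients.

- Clipped: when $\min$ selects the clipped term, the objective no longer depends on $\theta$ (it equals $(1 \pm \epsilon)\hat{A}_t$), so the gradient is 0 and this sample does not update the policy.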

- If $r_t(\theta) > 1 + \epsilon$ and $\hat{A}_t > 0$, the clipped term is selected, which means we stop aggressively increasing the probability of taking the current action at that state.

- If $r_t(\theta) < 1 - \epsilon$ and $\hat{A}_t < 0$, the clipped term is selected, which means we stop aggressively decreasing the probability of taking the current action at that state.

- If
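The cases above can be checked numerically. This sketch takes a finite-difference derivative of the per-sample clipped term with respect to the ratio; it is an illustration under that simplification, not how PPO is trained (real code differentiates with respect to $\theta$ via autodiff, but by the chain rule a zero derivative with respect to $r_t(\theta)$ also means a zero gradient for $\theta$). The helper names and the last case, which goes beyond the list above, are illustrative.

```python
def clipped_term(ratio, advantage, epsilon=0.2):
    """Per-sample min(r*A, clip(r, 1-eps, 1+eps)*A)."""
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    return min(ratio * advantage, clipped_ratio * advantage)

def d_term_d_ratio(ratio, advantage, epsilon=0.2, h=1e-6):
    """Central finite-difference estimate of d(clipped_term)/d(ratio)."""
    up = clipped_term(ratio + h, advantage, epsilon)
    down = clipped_term(ratio - h, advantage, epsilon)
    return (up - down) / (2 * h)

cases = [
    (1.5, 1.0),   # r > 1 + eps, A > 0: clipped, gradient 0 (stop increasing)
    (0.5, -1.0),  # r < 1 - eps, A < 0: clipped, gradient 0 (stop decreasing)
    (1.0, 1.0),   # r inside the range: unclipped, gradient equals A
    (0.5, 1.0),   # r < 1 - eps, A > 0: min picks the unclipped term, gradient equals A
]
for r, adv in cases:
    print(f"r={r}, A={adv:+}: gradient ~ {d_term_d_ratio(r, adv):.3f}")
```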
